
An MIT research team, led by Nataliya Kos’myna, recently published a paper about its Ddog project. It aims to turn a Boston Dynamics Spot quadruped into a basic communicator for people with physical challenges such as ALS, cerebral palsy, and spinal cord injuries.

The project’s brain-computer interface (BCI) system includes AttentivU, a pair of wireless glasses with sensors embedded in the frames. These sensors measure a person’s electroencephalogram (EEG), or brain activity, and electrooculogram (EOG), or eye movements.

This research builds on the university’s Brain Switch, a real-time, closed-loop BCI that lets users communicate nonverbally with a caretaker. Kos’myna’s Ddog project extends that application using the same tech stack and infrastructure as Brain Switch.

Spot could fetch items for users

There are 30,000 people living with ALS (amyotrophic lateral sclerosis) in the U.S. today, and an estimated 5,000 new cases are diagnosed each year, according to the National Organization for Rare Disorders. In addition, about 1 million Americans are living with cerebral palsy, according to the Cerebral Palsy Guide.

Many of these people have already lost, or will eventually lose, the ability to walk, dress themselves, speak, write, or even breathe. While communication aids do exist, most are eye-gaze devices that let users communicate through a computer. Few systems allow users to interact with the physical world around them.

Ddog’s biggest advantage is its mobility. Spot is fully autonomous. This means that when given simple instructions, it can carry them out without intervention.

Spot is also highly mobile. Its four legs mean that it can go almost anywhere a human can, including up and down slopes and stairs. The robot’s arm accessory allows it to perform tasks like delivering groceries, moving a chair, or bringing a book or toy to the user.

The MIT system runs on just two iPhones and a pair of glasses. It doesn’t require sticky electrodes or backpacks, making it much more accessible for everyday use than other aids, said the team.

How Ddog works

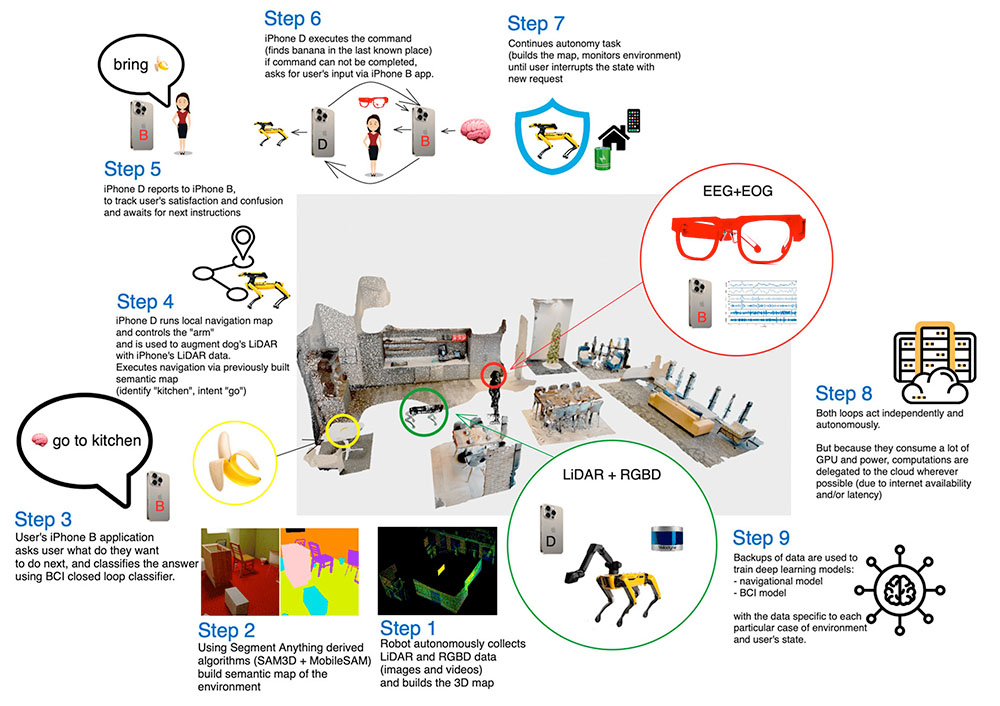

The first thing Spot must do when working with a new user in a new environment is create a 3D map of the world it’s working in. Next, the first iPhone prompts the user by asking what they want to do, and the user answers simply by thinking of what they want.
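The article does not describe how the glasses’ signals are turned into a choice. As a purely illustrative sketch, assume the decoder (in the spirit of Brain Switch) reduces the user’s response to each prompt to a yes/no answer. The first iPhone could then step through a menu of tasks until the user confirms one. The names `select_task` and `decode_yes`, the menu items, and the retry limit are hypothetical and are not taken from the MIT system.

```python
from typing import Callable, Optional, Sequence

# Hypothetical decoder: returns True when the user's EEG/EOG response to a
# prompt is classified as "yes". The real AttentivU pipeline is not described.
Decoder = Callable[[str], bool]


def select_task(options: Sequence[str], decode_yes: Decoder,
                max_rounds: int = 3) -> Optional[str]:
    """Offer each option in turn and return the first one the user confirms.

    The whole menu is repeated up to max_rounds times; None means no choice.
    """
    for _ in range(max_rounds):
        for option in options:
            prompt = f"Do you want Spot to {option}?"
            if decode_yes(prompt):
                return option
    return None


if __name__ == "__main__":
    menu = ["bring a book", "move a chair", "deliver groceries"]
    # Stand-in decoder for illustration only: "answers" yes to the chair prompt.
    fake_decoder = lambda prompt: "chair" in prompt
    print(select_task(menu, fake_decoder))  # prints "move a chair"
```

A scanning menu like this keeps each decision binary, which is generally easier to classify reliably from noisy biosignals than choosing among many options at once.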

The second iPhone runs the local navigation map, controls Spot’s arm, and augments Spot’s lidar with the iPhone’s lidar data. The two iPhones communicate with each other to track Spot’s progress in completing tasks.
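The article says only that the two phones communicate to track Spot’s progress. One hypothetical way to do that is for the robot-side phone to send small JSON status messages, which the user-side phone checks against a simple state machine. The stage names, message fields, `TaskTracker` class, and `status_message` helper below are all assumptions made for illustration.

```python
import json
from enum import Enum


class Stage(Enum):
    QUEUED = "queued"
    NAVIGATING = "navigating"
    USING_ARM = "using_arm"
    DONE = "done"
    FAILED = "failed"


# Allowed forward transitions (hypothetical). Any non-terminal stage may also
# move to FAILED; DONE and FAILED accept no further updates.
NEXT = {
    Stage.QUEUED: {Stage.NAVIGATING},
    Stage.NAVIGATING: {Stage.USING_ARM, Stage.DONE},
    Stage.USING_ARM: {Stage.DONE},
}


def status_message(task_id: str, stage: Stage) -> str:
    """Status update the robot-side phone might send (hypothetical format)."""
    return json.dumps({"task_id": task_id, "stage": stage.value})


class TaskTracker:
    """Progress view kept by the user-facing phone (hypothetical)."""

    def __init__(self, task_id: str) -> None:
        self.task_id = task_id
        self.stage = Stage.QUEUED

    def apply(self, raw: str) -> Stage:
        msg = json.loads(raw)
        if msg["task_id"] != self.task_id:
            return self.stage  # ignore updates that belong to other tasks
        new = Stage(msg["stage"])
        allowed = set(NEXT.get(self.stage, set()))
        if self.stage in NEXT:
            allowed.add(Stage.FAILED)
        if new not in allowed:
            raise ValueError(f"illegal transition {self.stage.value} -> {new.value}")
        self.stage = new
        return self.stage


if __name__ == "__main__":
    tracker = TaskTracker("fetch-book")
    for s in (Stage.NAVIGATING, Stage.USING_ARM, Stage.DONE):
        tracker.apply(status_message("fetch-book", s))
    print(tracker.stage.value)  # prints "done"
```

Rejecting out-of-order updates lets the user-facing phone notice when its view of the task and the robot’s actual state have drifted apart.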

The MIT team designed the system to work either fully offline or online. The online version uses a more advanced set of machine learning models that are also better fine-tuned.

An overview of the Project Ddog system. | Source: MIT
