
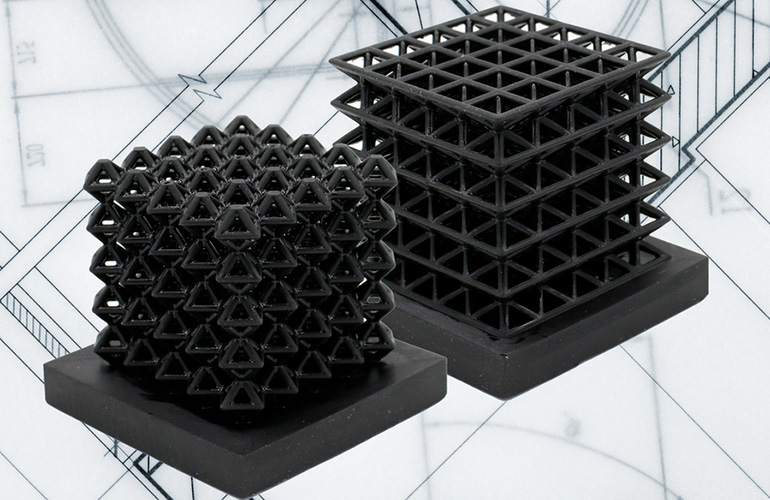

MIT researchers have created a 3D-printed material with embedded sensors that can sense how it's moving. | Source: MIT/CSAIL

Researchers at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have developed programmable materials that can sense their own movements. The team created lattice materials with networks of air-filled channels, which allow researchers to measure changes in air pressure within the channels as the material moves or bends.

The lattice structure created by the team is a kind of architected material, meaning that its mechanical properties, such as stiffness or toughness, change when the geometry of its features changes. For a lattice, the denser the network of cells making up the structure, the stiffer it is.
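For a rough sense of how strongly density drives stiffness, the classic Gibson-Ashby scaling for cellular solids relates a lattice's effective Young's modulus $E^*$ to its relative density $\rho^*/\rho_s$, where $E_s$ and $\rho_s$ belong to the solid the lattice is printed from. This is a general textbook model, not a result reported by the CSAIL team; the constant $C$ and exponent $n$ depend on the cell geometry:

$$
\frac{E^*}{E_s} = C\left(\frac{\rho^*}{\rho_s}\right)^{n},
\qquad n \approx 2 \text{ (bending-dominated)},\quad n \approx 1 \text{ (stretch-dominated)}
$$

Under this model, doubling the relative density of a bending-dominated lattice from 0.1 to 0.2 raises its stiffness by roughly $2^2 = 4$ times, while a stretch-dominated lattice would stiffen by roughly 2 times.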

It’s difficult to integrate sensors into these materials because of their sparse, complex shapes. Putting sensors on the outside of the structure, however, doesn’t provide enough information to get a complete picture of how the material is deforming or moving.

CSAIL’s team used digital light processing (DLP) 3D printing to incorporate the air-filled channels into the struts that form the lattice structure of the team’s material. The researchers drew the structure out of a pool of resin and hardened it into a precise shape using projected light. In this method, an image is projected onto the wet resin, and the areas struck by the light are cured. The researchers used pressurized air, a vacuum and intricate cleaning to remove any excess resin from the channels before it could cure.

When the resulting structure is moved or squeezed, the channels formed by the 3D printing are deformed, causing the volume of air inside to change. The team used an off-the-shelf pressure sensor to measure these changes in pressure and get feedback on how the material is deforming.
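A back-of-the-envelope sketch of why squeezing a channel shows up as a pressure change: if a channel and its sensor form a sealed volume of air held at constant temperature, Boyle's law gives $p_0 V_0 = p V$. The function names, units and numbers below are illustrative assumptions; the article does not describe the team's calibration, and a real setup would also include tubing and sensor dead volume.

```python
def channel_pressure(p0_kpa: float, v0_mm3: float, v_mm3: float) -> float:
    """Absolute pressure in a sealed channel after its volume changes.

    Assumes an ideal gas at constant temperature (Boyle's law: p0*v0 = p*v)
    and ignores tubing and sensor dead volume.
    """
    if v0_mm3 <= 0 or v_mm3 <= 0:
        raise ValueError("volumes must be positive")
    return p0_kpa * v0_mm3 / v_mm3


def pressure_change(p0_kpa: float, v0_mm3: float, v_mm3: float) -> float:
    """Change a pressure sensor would report relative to the undeformed state."""
    return channel_pressure(p0_kpa, v0_mm3, v_mm3) - p0_kpa


if __name__ == "__main__":
    # Hypothetical channel: 100 mm^3 at atmospheric pressure, squeezed to 95 mm^3
    print(round(pressure_change(101.325, 100.0, 95.0), 2))  # 5.33
```

Under these assumptions, compressing the hypothetical channel by 5% raises its pressure by about 5.3 kPa, and stretching it (a larger volume) would show up as a pressure drop instead.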

The CSAIL team then built on these results by embedding sensors into a class of materials developed for motorized soft robotic actuators, called handed shearing auxetics (HSAs). HSAs can be twisted or stretched, which makes them well suited to soft robotic actuators. Like architected materials, HSAs are difficult to embed sensors into because of their complex structure.

The team ran the sensorized HSA material through a series of movements for more than 18 hours and used the sensor data they gathered to train a neural network that accurately predicts the actuator’s motion.
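The article does not describe the network, so the sketch below swaps in a much simpler model to show the same supervised-learning idea: recorded pairs of sensor readings and motion are used to fit a mapping that can then predict motion from new readings. It fits an ordinary least-squares line from one hypothetical channel's pressure change to one hypothetical twist angle. The names and data are invented for illustration; a neural network would replace the line with a learned nonlinear function of many channels.

```python
from statistics import mean


def fit_line(pressures, angles):
    """Ordinary least-squares fit of angle ~ slope * pressure + intercept.

    Stand-in for the team's neural network (assumption): one sensor
    channel, one motion variable, linear relationship.
    """
    if len(pressures) != len(angles) or len(pressures) < 2:
        raise ValueError("need at least two paired samples")
    mx, my = mean(pressures), mean(angles)
    sxx = sum((x - mx) ** 2 for x in pressures)
    if sxx == 0:
        raise ValueError("pressure readings must vary")
    sxy = sum((x - mx) * (y - my) for x, y in zip(pressures, angles))
    slope = sxy / sxx
    intercept = my - slope * mx
    return slope, intercept


def predict(model, pressure):
    """Estimate the motion variable from a new pressure reading."""
    slope, intercept = model
    return slope * pressure + intercept


if __name__ == "__main__":
    # Synthetic, made-up training pairs: pressure change (kPa) -> twist angle (degrees)
    train_p = [0.0, 1.0, 2.0, 3.0]
    train_angle = [1.0, 3.0, 5.0, 7.0]
    model = fit_line(train_p, train_angle)
    print(predict(model, 2.5))  # 6.0
```

The split into a fitting step and a prediction step mirrors how a trained model is used: the long recording session supplies the training pairs, and afterward only the live sensor readings are needed to estimate motion.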

In the future, the team hopes its technology could be used to create soft, flexible robots with embedded sensors. These robots could understand their own posture and movements. The CSAIL team also sees potential for their technology to be used to create wearable devices that provide feedback on how the user is moving or interacting with their environment.

The team recently published the results of their study in Science Advances. Daniela Rus, the Andrew and Erna Viterbi Professor of Electrical Engineering and Computer Science and director of CSAIL, was the lead author on the paper. Co-authors included Lillian Chin, a graduate student at MIT CSAIL, Ryan Truby, former CSAIL postdoc and now assistant professor at Northwestern University, and Annan Zhang, CSAIL graduate student.
