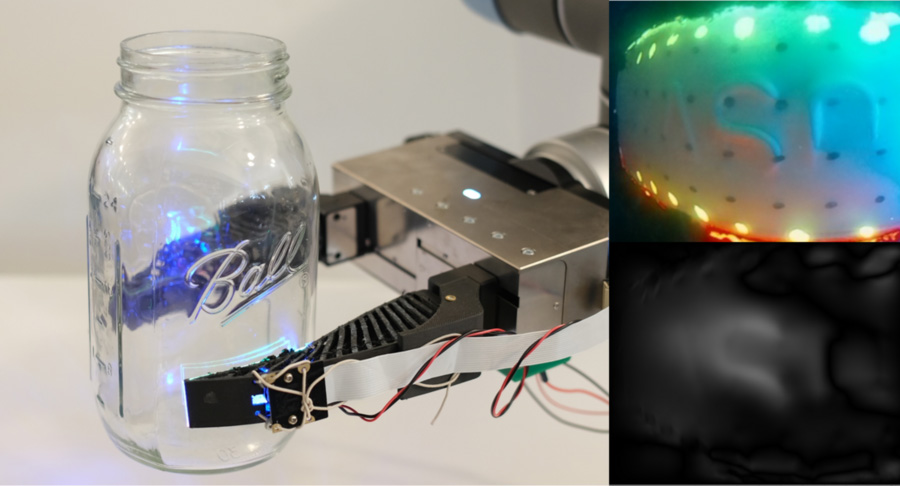

The GelSight Fin Ray gripper was able to feel the pattern on Mason jars. | Source: CSAIL

A research team at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) has developed a robotic gripper whose Fin Ray fingers can feel the objects they manipulate.

The work comes from CSAIL’s Perceptual Science Group and was led by professor Edward Adelson and Sandra Liu, a mechanical engineering PhD student. The team built touch sensors into the gripper that let it feel with sensitivity equal to or greater than that of human skin.

The gripper has two Fin Ray fingers, 3D printed from a flexible plastic. Like a fish’s tail, each finger bends toward an applied force rather than away from it. Typical Fin Ray grippers have cross-struts running through the interior, but the CSAIL team hollowed out the interior to make room for the sensing components.

LEDs illuminate the inside of each finger. At one end of the hollow cavity, a camera sits on a semi-rigid backing. The camera faces a layer of pads made of GelSight, a silicone gel. The pads are glued to a thin acrylic sheet, which is attached to the opposite end of the cavity.

The fingers are designed to conform closely to the objects they grip. As a finger touches an object, the camera records how the silicone and acrylic layers deform. From these images, computational algorithms estimate the object’s general shape, its surface roughness, its orientation in space, and the force applied by, and imparted to, each finger.
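The article does not describe the team’s algorithms. Purely as an illustration of the general idea behind camera-based tactile sensing, one common first step is to compare each camera frame with a reference frame captured while nothing touches the gel: pixels whose brightness changes beyond a threshold mark where contact occurred. The sketch below assumes grayscale frames stored as nested Python lists; the function name `contact_patch` and the default threshold are hypothetical, and this is not the CSAIL pipeline, which also recovers shape, orientation, and force.

```python
from typing import List, Optional, Tuple

# Hypothetical representation: a grayscale frame as rows of pixel intensities.
Image = List[List[float]]


def contact_patch(
    reference: Image, current: Image, threshold: float = 10.0
) -> Tuple[List[List[bool]], int, Optional[Tuple[float, float]]]:
    """Illustrative sketch (not the CSAIL method): locate the contact region
    by differencing the current frame against a no-contact reference frame.

    Returns a boolean contact mask, the contact area in pixels, and the
    (row, col) centroid of the contact region, or None if nothing changed.
    """
    if len(reference) != len(current) or any(
        len(r) != len(c) for r, c in zip(reference, current)
    ):
        raise ValueError("reference and current frames must have the same shape")

    # A pixel counts as "in contact" if its intensity changed by more than threshold.
    mask = [
        [abs(cur - ref) > threshold for ref, cur in zip(ref_row, cur_row)]
        for ref_row, cur_row in zip(reference, current)
    ]

    # Accumulate area and coordinate sums to compute the centroid.
    area = 0
    row_sum = 0.0
    col_sum = 0.0
    for i, row in enumerate(mask):
        for j, hit in enumerate(row):
            if hit:
                area += 1
                row_sum += i
                col_sum += j

    centroid = (row_sum / area, col_sum / area) if area else None
    return mask, area, centroid


if __name__ == "__main__":
    # Synthetic example: a 2x2 bright spot where the gel was pressed.
    ref = [[100.0] * 5 for _ in range(5)]
    cur = [row[:] for row in ref]
    for i in (1, 2):
        for j in (2, 3):
            cur[i][j] = 140.0
    _, area, centroid = contact_patch(ref, cur)
    print(area, centroid)  # 4 (1.5, 2.5)
```

A real sensor would go further, for example by using the colored LED lighting to estimate surface slopes and integrating them into a depth map, but simple frame differencing already shows why an internal camera can "see" touch.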

Using this method, the gripper handled a variety of objects, including a mini screwdriver, a plastic strawberry, an acrylic paint tube, a Ball Mason jar and a wine glass.

While holding these objects, the gripper detected fine details on their surfaces. On the plastic strawberry, for example, it could identify individual seeds. The fingers could also feel the lettering on the Mason jar, something vision-based robotic systems struggle with because glass refracts light.

The gripper could also squeeze a paint tube without rupturing it and spilling its contents, and it could pick up and set down a wine glass. By sensing when the base of the glass touched the tabletop, it placed the glass properly in seven out of 10 attempts.

The team hopes to improve the sensor by making the fingers stronger. Removing the cross-struts also removed much of the fingers’ structural integrity, so they tend to twist while gripping objects. The CSAIL team also wants to build a three-fingered gripper that could pick up fruits and vegetables and evaluate their ripeness.

The team’s work was presented at the 5th IEEE International Conference on Soft Robotics (RoboSoft 2022).

Tell Us What You Think!