
Researchers reported at ICRA that they can vectorize controllers so they are available during both training and deployment. | Source: NVIDIA

NVIDIA Corp. research teams presented their findings at the IEEE International Conference on Robotics and Automation, or ICRA, last week in Yokohama, Japan. One group, in particular, presented research focusing on geometric fabrics, a popular topic at the event.

In robotics, trained policies are approximate by nature. While they usually do the right thing, they sometimes make a robot move too fast, collide with things, or jerk around. Generally, roboticists cannot anticipate everything that might occur.

To counteract this, trained policies are always deployed with a layer of low-level controllers that intercept the policy's commands and translate them so they stay within the limitations of the hardware. This is especially important when reinforcement learning-trained policies run on a physical robot, said the team at the NVIDIA Robotics Research Lab in Seattle.
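To make the idea of an intercepting controller concrete, here is a minimal Python sketch of a generic safety filter. It is not NVIDIA's controller: it assumes the policy outputs joint-position targets, and the `JointLimits` type, the `filter_command` function, and all limit values are hypothetical. The filter caps how far each joint may move in one control step and then clamps the result to the joint's range.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class JointLimits:
    """Hypothetical per-joint limits for an illustrative robot (rad, rad/s)."""
    lower: List[float]
    upper: List[float]
    max_velocity: List[float]


def filter_command(
    target: List[float],
    current: List[float],
    limits: JointLimits,
    dt: float,
) -> List[float]:
    """Intercept a policy's joint-position targets and return safer commands.

    Each joint's step is capped at max_velocity * dt, and the resulting
    command is clamped to that joint's [lower, upper] range.
    """
    safe = []
    for i, (q_target, q_now) in enumerate(zip(target, current)):
        max_step = limits.max_velocity[i] * dt
        # Velocity cap: limit how far the joint may move in one control step.
        step = max(-max_step, min(max_step, q_target - q_now))
        q_cmd = q_now + step
        # Position limits: keep the command inside the joint's range.
        q_cmd = max(limits.lower[i], min(limits.upper[i], q_cmd))
        safe.append(q_cmd)
    return safe


if __name__ == "__main__":
    limits = JointLimits(lower=[-1.0, -0.5], upper=[1.0, 0.5], max_velocity=[2.0, 2.0])
    # The policy requests a large jump on joint 0 and an out-of-range target on joint 1.
    print(filter_command([1.5, 0.9], [0.0, 0.45], limits, dt=0.1))
```

With a 0.1 s step and a 2 rad/s cap, the requested jump from 0.0 to 1.5 rad on the first joint becomes a 0.2 rad step, and the second joint's command is clamped to its 0.5 rad upper limit.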

Because these controllers sit between reinforcement learning (RL) policies and the robot, the researchers found unique value in also running them during training. Using GPU-accelerated RL training tools, they vectorized the controllers so they are available during both training and deployment.
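Vectorizing a controller means computing it for thousands of simulated environments at once as array operations, rather than looping over robots one by one. The sketch below assumes NumPy and uses a simplified damped-spring joint controller with joint limits as a stand-in; it is not the geometric fabric formulation, and the function name, gains, limits, and batch sizes are illustrative assumptions. The same function could process a batch of 4,096 environments during training or a batch of one on the real robot.

```python
import numpy as np


def vectorized_controller_step(q, qd, target, dt, stiffness=20.0, damping=9.0,
                               lower=-1.0, upper=1.0, max_accel=50.0):
    """Advance a simple joint controller one step for every environment at once.

    q, qd, target: arrays of shape (num_envs, num_joints) holding joint
    positions, joint velocities, and the policy's joint targets.
    A damped spring pulls each joint toward its target; acceleration is
    clipped, and joints are held inside [lower, upper].
    Illustrative stand-in only, not NVIDIA's geometric fabric controller.
    """
    # Spring toward the target plus velocity damping, for all envs in one operation.
    accel = stiffness * (target - q) - damping * qd
    accel = np.clip(accel, -max_accel, max_accel)
    # Semi-implicit Euler: update velocity first, then position with the new velocity.
    qd_next = qd + accel * dt
    q_next = q + qd_next * dt
    # Stop joints that reach a limit while still moving outward.
    at_limit = ((q_next <= lower) & (qd_next < 0)) | ((q_next >= upper) & (qd_next > 0))
    q_next = np.clip(q_next, lower, upper)
    qd_next = np.where(at_limit, 0.0, qd_next)
    return q_next, qd_next


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    num_envs, num_joints = 4096, 16  # illustrative batch size and joint count
    q = np.zeros((num_envs, num_joints))
    qd = np.zeros_like(q)
    target = rng.uniform(-0.5, 0.5, size=q.shape)  # stand-in for policy actions
    for _ in range(500):
        q, qd = vectorized_controller_step(q, qd, target, dt=0.01)
    print("max tracking error:", np.abs(q - target).max())
```

Because every operation acts on whole arrays, the cost of adding environments is absorbed by the array library; on a GPU, the same pattern would typically be written with a GPU array framework instead of NumPy.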

Out in the real world, companies working on humanoid robots, for example, demonstrate their systems with low-level controllers that balance the robot and keep it from running its arms into its own body.

Researchers draw on past work for current project

The research team built on two previous NVIDIA projects for this current paper. The first was “Geometric Fabrics: Generalizing Classical Mechanics to Capture the Physics of Behavior,” which won a best paper award at last year’s ICRA. The Santa Clara, Calif.-based company’s team vectorized the controllers produced in that project.

The in-hand manipulation tasks the researchers address in this year’s paper also come from a well-known line of research on DeXtreme. In this new work, the researchers merged those two lines of research to train DeXtreme policies on top of vectorized geometric fabric controllers.

NVIDIA’s team said this keeps the robot safer, guides policy learning through the nominal fabric behavior, and systematizes simulation-to-reality (sim2real) training and deployment to get one step closer to using RL tooling in production settings.

From this, the researchers built a foundational infrastructure that let them iterate quickly to get the domain randomization right during training, which sets them up for successful sim2real deployment.

For example, by iterating quickly between training and deployment, the team reported that it could adjust the fabric structure and add substantial random perturbation forces during training to achieve a higher level of robustness than in previous work.
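Random perturbation forces are a common form of domain randomization: the simulator pushes the manipulated object so the policy learns to recover. Here is a minimal NumPy sketch, assuming a force is applied to each environment's object with some per-step probability and scales with the object's (randomized) mass. The function name, `force_scale`, `apply_prob`, and the mass range are hypothetical and are not values from the paper.

```python
import numpy as np


def sample_perturbation_forces(rng, object_mass, force_scale=2.0, apply_prob=0.1):
    """Sample random pushes to apply to each environment's object this step.

    object_mass: array of shape (num_envs,), which may itself be randomized.
    force_scale: force per unit mass (N/kg), a hypothetical tuning knob.
    Returns forces of shape (num_envs, 3). With probability apply_prob an
    environment gets a force in a uniformly random direction with magnitude
    force_scale * object_mass; otherwise its force is zero.
    """
    num_envs = object_mass.shape[0]
    # Normalized Gaussian samples give directions uniform over the sphere.
    directions = rng.normal(size=(num_envs, 3))
    directions /= np.linalg.norm(directions, axis=1, keepdims=True)
    magnitudes = force_scale * object_mass
    # Only a random subset of environments is pushed on a given step.
    active = rng.random(num_envs) < apply_prob
    return directions * (magnitudes * active)[:, None]


if __name__ == "__main__":
    rng = np.random.default_rng(seed=0)
    num_envs = 4096
    # Randomize object mass per environment (hypothetical range, in kg).
    masses = rng.uniform(0.05, 0.15, size=num_envs)
    forces = sample_perturbation_forces(rng, masses, force_scale=4.0)
    print(forces.shape, int((np.linalg.norm(forces, axis=1) > 0).sum()), "envs pushed")
```

Raising `force_scale` or `apply_prob` is one way to make training more demanding, in the spirit of the substantial perturbations the team describes.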

In prior DeXtreme work, the real-world experiments were extremely hard on the physical robot. They wore down the motors and sensors and changed the behavior of the underlying control over the course of experimentation.

At one point, the robot even broke down and started smoking. With geometric fabric controllers underlying the policy and protecting the robot, the researchers found they could be much more liberal in deploying and testing policies without worrying about the robot destroying itself.

NVIDIA presents more research at ICRA

NVIDIA highlighted four other papers its researchers submitted to ICRA this year. They are:

- SynH2R: The researchers behind this paper proposed a framework to generate realistic human grasping motions that can be used for training a robot. With the method, the team could generate synthetic training and testing data with 100 times more objects than previous work. The team said that, both in simulation and on a real system, its method is competitive with state-of-the-art methods that rely on real human motion data.

- Out of Sight, Still in Mind: In this paper, NVIDIA’s researchers tested how a robotic arm reacts to objects it had previously seen but that were then occluded. With the team’s approaches, robots can perform multiple challenging tasks, including reasoning about occluded objects, handling objects with novel appearances, and dealing with object reappearance. The company claimed that these approaches outperformed implicit memory baselines.

- Point Cloud World Models: The researchers set up a novel point cloud world model and point cloud-based control policies that were able to improve performance, reduce learning time, and increase robustness for robotic learners.

- SKT-Hang: This team looked at the problem of how to use a robot to hang up a wide variety of objects on different supporting structures. This is a deceptively tricky problem, as there are countless variations in both the shape of objects and the supporting structure poses.

Surgical simulation uses Omniverse

NVIDIA also presented ORBIT-Surgical, a physics-based surgical robot simulation framework with photorealistic rendering powered by NVIDIA Isaac Sim on the NVIDIA Omniverse platform. It uses GPU parallelization to facilitate the study of robot learning to augment human surgical skills.

The framework also enables realistic synthetic data generation for active perception tasks. The researchers demonstrated ORBIT-Surgical sim2real transfer of learned policies onto a physical dVRK robot. They plan to release the underlying simulation application as a free, open-source package upon publication.

In addition, the DefGoalNet paper focuses on shape servoing, a robotic task that involves manipulating deformable objects into a specific goal shape.

Partners present their developments at ICRA

NVIDIA partners also showed their latest developments at ICRA. ANYbotics presented a complete software package to grant users access to low-level controls down to the Robot Operating System (ROS).

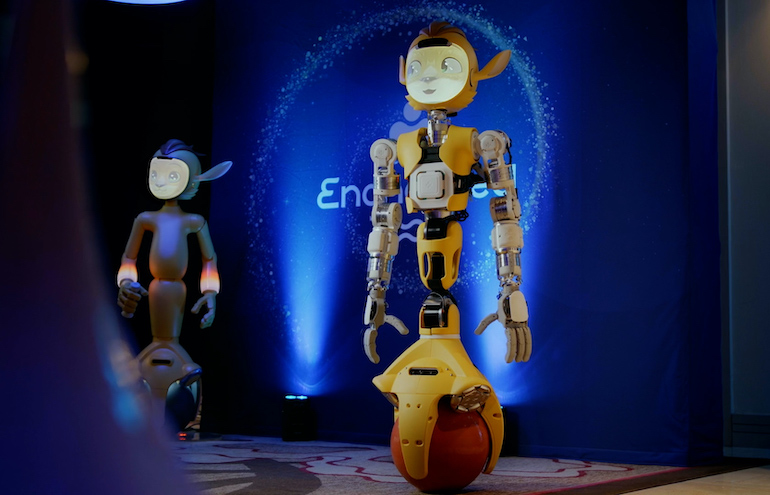

Franka Robotics highlighted its work with NVIDIA Isaac Manipulator and an NVIDIA Jetson-based AI companion to power robot control, as well as the Franka toolbox for MATLAB. Enchanted Tools exhibited its Jetson-powered Mirokaï robots.

NVIDIA recently participated in the Robotics Summit & Expo in Boston and the opening of Teradyne Robotics’ new headquarters in Odense, Denmark.

NVIDIA partner Enchanted Tools showed Mirokaï at CES and ICRA. Source: Enchanted Tools
